Statistical Machine Learning

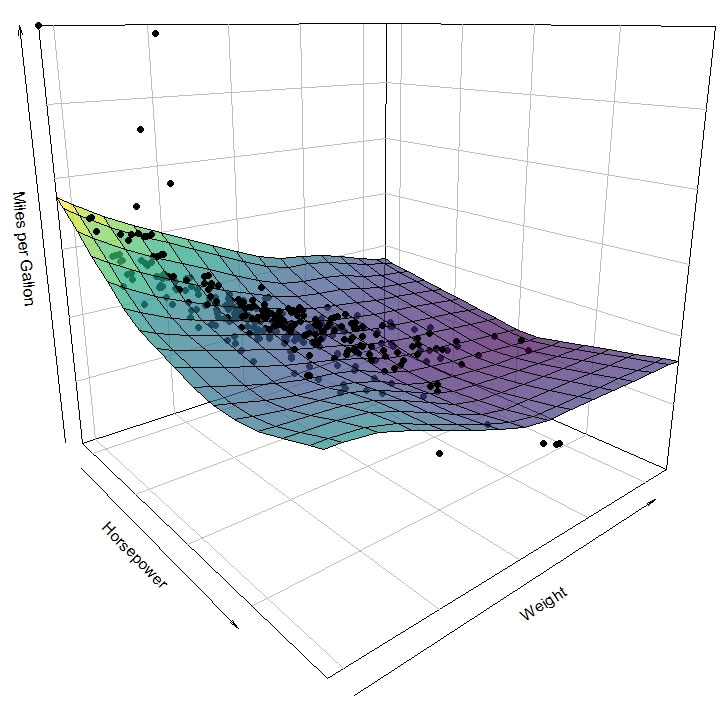

This course complements the Master's curriculum with models, methods and concepts mainly from the realm of machine learning. We will study intermediate to advanced models (e.g. trees, neural networks, ensembles), feature representations (e.g. kernels, adaptive basis functions) and strategies to ensure generalization (e.g. validation, regularization).

Participants are expected to have a working knowledge of R, or to be willing to acquire it alongside the course. If you do not feel comfortable with R, you may join the Bachelor module "Data Science I" during the first month of the winter semester to catch up (no credit).

Course Structure

This course is hands-on: each weekly lecture is complemented by a weekly R tutorial in which we discuss, implement and practice the current topic.

Content

The content is still tentative and subject to change.

| Week 1 | Empirical Loss Minimization |

| Week 2 | Tree models |

| Week 3 | Features I: PCA |

| Week 4 | Features II: Clustering |

| Week 5 | Features III: Kernels & Adaptive Basis Functions |

| Week 6 | Neural Networks I |

| Week 7 | Neural Networks II |

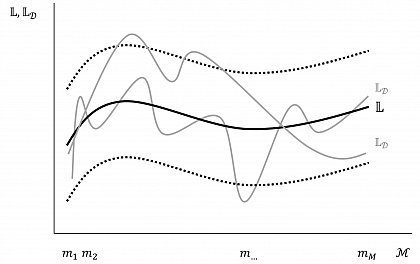

| Week 8 | Generalization, Statistical Learning Theory |

| Week 9 | Validation, Information Criteria |

| Week 10 | Regularization, Model Selection |

| Week 11 | Ensembles: Bagging |

| Week 12 | Ensembles: Boosting |

| Week 13 | TBD (Ensembles: Mixtures of Experts) |

| Week 14 | TBD |

| Week 15 | TBD |

Coursework

There will be a project and a presentation, due at the end of the semester. Details are to be announced.